I’m sure it started with YouTube. I’d been interested in playing around with visuals to accompany music, in particular live jamming, for a while, and a tutorial somewhere must have grabbed my attention and set me on the path of experimenting with audio-reactive, real-time image generation.

I had seen the tutorial below, which is pretty cool,

but I ended up making stuff with TouchDesigner and StreamDiffusionTD a little while later by following this one from Scott Mann.

Some time later, I ended up making this, and it was freaking fun!

Since that first video, I’ve done a bunch more and started using a hosted GPU backend via the Daydream API. You can play around with Daydream in a browser here, and it’s pretty wild. Highly recommend going and having a play.

Some of my early experiments were a bit frenetic, with rapidly changing images, but I still find them mesmerizing to watch, like zooming into a fractal. For whatever reason, this one has gotten the most views.

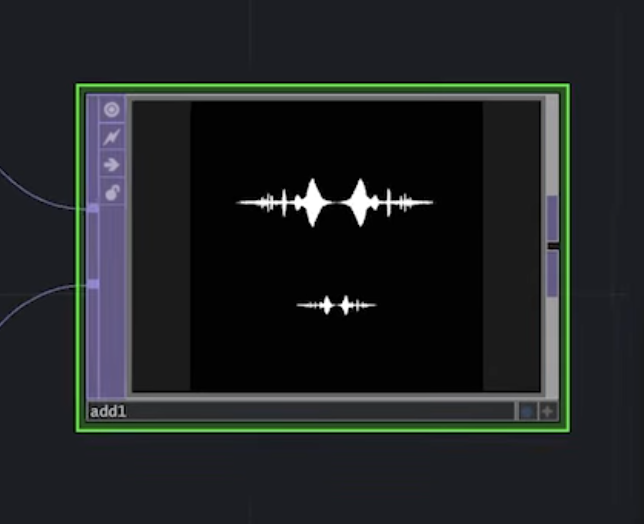

It’s still using the same structure, a spectrogram, mirrored and doubled, to drive StreamDiffusion.
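For anyone curious what the mirror-and-double idea could look like outside TouchDesigner, here’s a rough Python sketch. It isn’t the actual network from these videos: the function names, the frame sizes, and especially my reading of “doubled” (stacking a vertically flipped copy under the mirrored image) are all assumptions for illustration.

```python
import numpy as np

def spectrogram(signal, frame_size=256, hop=128):
    """Magnitude spectrogram: rows are frequency bins, columns are time frames."""
    window = np.hanning(frame_size)
    n_frames = 1 + (len(signal) - frame_size) // hop
    frames = np.stack([
        signal[i * hop : i * hop + frame_size] * window
        for i in range(n_frames)
    ])
    return np.abs(np.fft.rfft(frames, axis=1)).T

def mirror_and_double(spec):
    """Hypothetical layout: mirror left-to-right, then stack a flipped copy below."""
    mirrored = np.concatenate([spec, spec[:, ::-1]], axis=1)   # left/right symmetry
    doubled = np.concatenate([mirrored, mirrored[::-1, :]], axis=0)  # top/bottom symmetry
    peak = doubled.max()
    # Scale to 0..1 so the result can be used as a greyscale image.
    return doubled / peak if peak > 0 else doubled

# Example input: one second of a 440 Hz tone at an 8 kHz sample rate.
sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 440 * t)
image = mirror_and_double(spectrogram(tone))
```

The resulting array is symmetric in both directions, which is the kind of input image that could then be handed to an image-to-image model frame by frame.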

I made some variations on how the spectrogram was used and ended up with results like this, where things look a little more geometric.

I quite like this one, where things dipped into a hand-drawn feel at times,

and this one, as it’s slower and really has that quality of earlier image models that I find interesting, where there is a lot of ‘not quite’ going on, an ‘almost-becoming’.

There’s a new version of StreamDiffusionTD out. It’s crashing on my Mac at the moment, but it should be significantly higher quality once it’s working. Quite excited for that.
